Back on May 18, Reid posted a great post on how you should set up your Power Queries using referencing. It’s a technique that I’ve been preaching on my blog for years, and is something that we teach in the Power Query workshop as well. I mean, let’s face it, it only makes sense, right? Connect to the data source once, and re-use that information over and over again as you spin off different shapes for the rest of your tables.So what if I was to tell you that – from a performance standpoint – this is only actually a good practice in Power BI desktop, and probably the worst thing you could do to your Excel model? That doing this will drive Power Query refresh speeds down? How’s that for a click-bait paragraph? Let’s take a look…

A sample model

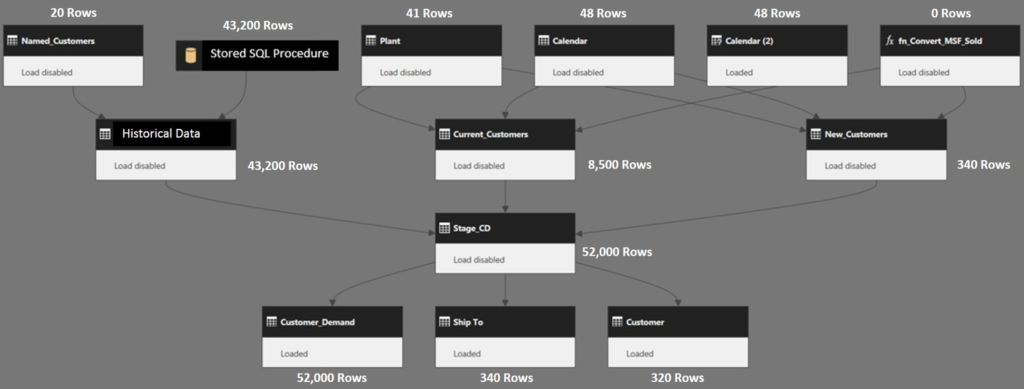

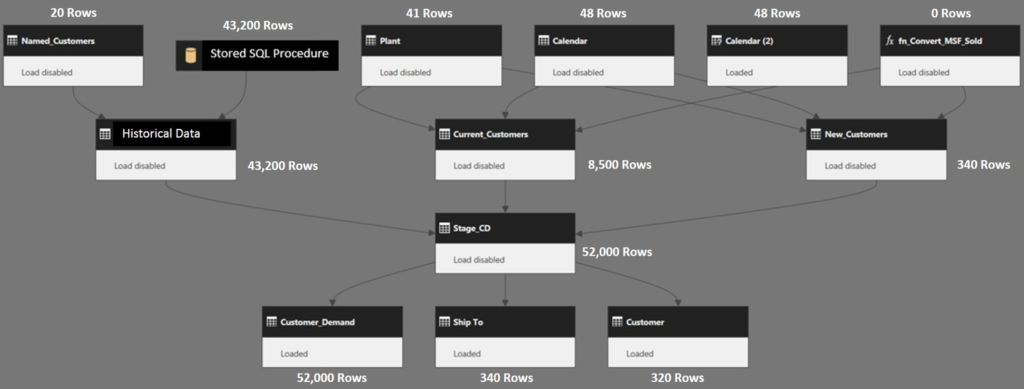

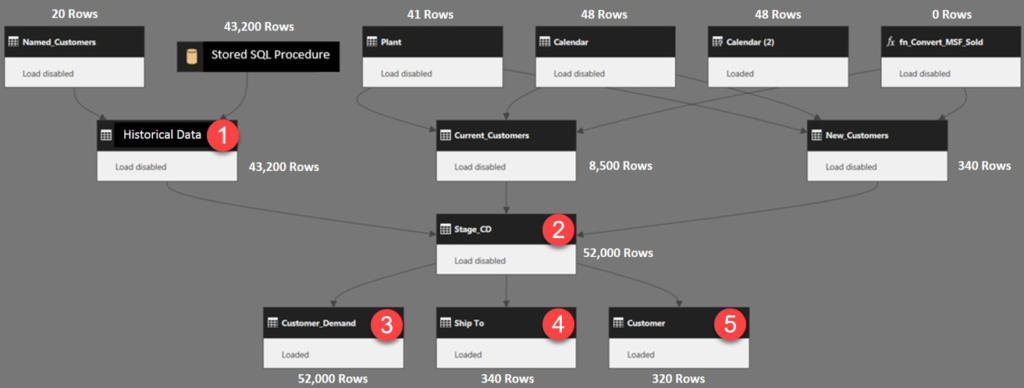

If you’ve been working with Power Query for any amount of time, you’ll start building models that look like this:

In fact, this is based on a real model that one of my clients built. Pretty normal really; it pulls data from a SQL stored procedure, 4 excel tables and a custom function. Many of us build models like this.Now, let me ask you a skill testing question… when you click Data –> Refresh All, how many times is the stored procedure executed?The answer is 1, right?

Trust but verify…

My client sat down with his IT department (he is lucky enough to have one that is super cooperative) and worked through some performance testing. The catalyst for this was that the 52,000 row model was taking ages to refresh. The time was sufficient to kill his ability to build and deploy Power Query solutions as the users just wouldn’t accept that kind of latency.Here’s how they tested:

- The Model was built in 64 bit Excel 2013 using Power Query, feeding directly into Power Pivot Data Model. The data was then landed from the Data Model into a Pivot Table.

- That same workbook was imported into Power BI and an equivalent Matrix visualization was created with the same information as the pivot table in the Excel version of the model.

- Each model was refreshed several times. They timed the total refresh time with a stopwatch (since there wasn’t really another way to do it) and used SQL Sentry to log the number of times the stored procedure was called.

- To account for human latency in the stopwatch start/stop, this test was repeated several times with the results being averaged.

What they found was… disturbing to say the least.

Power Query refresh test results

Here are the average power query refresh times that were logged for this model:

| Program | Refresh Time |

| Power BI Desktop | 9 seconds |

| Excel | 75 seconds! |

That is absolutely insane! Excel is over 8 times as long to refresh the same data!Imke Feldman made a mention of this in her blog post Performance difference between Excel and PowerBI with M is huge! While the angle of her post is very much how impressed she is with Power BI, I have to ask the opposite question: Why does Excel Power Query performance suck so badly? These two technologies are supposed to be based on the same architecture, aren’t they? So why are we celebrating Power BI’s greatness and not screaming about Excel’s abysmal performance?The more telling issue actually shows up in the SQL Sentry monitoring of the Stored procedure calls:

| Program | Stored Proc Called |

| Power BI Desktop | 2 times |

| Excel | 6 times! |

Seriously? How does this even make sense?To be honest, I was actually shocked to see that the stored procedure is called more than once in Power BI. I would not have expected that at all, and would have assumed that the SQL Stored Procedure would be executed once since it is only referenced by the Historical Data table. The only thing we can fathom here is that it is called once for previews, and once for the actual load… or possibly that privacy levels may force something to be called twice. I’m not 100% certain, except that we now know that it is called at least one more time that we think would be necessary.But Excel? Why is Excel calling that stored procedure six times? That makes no sense at all…Why don’t we assign a number to each of the queries that relate to the SQL Stored Procedure:

Adding one to the total on the theory of a preview, we get to six. Unlike Power BI, in Excel a model refresh re-executes each child query in full, never caching the results. No wonder the performance is horrendous!To solve this issue, my client has had to start flattening his query structure… something that is painful and become impossible when you run afoul of the formula firewall. And that’s the biggest rub here. In order to follow best practices and avoid formula firewall issue, you are SUPPOSED to build queries as shown above. And yet your performance will be crucified for doing it.Worse, I have no way to believe that this does not ALSO happen with all Excel tables in the model. Unfortunately we don’t have a software like SQL Sentry that works on Excel files…

Another Model

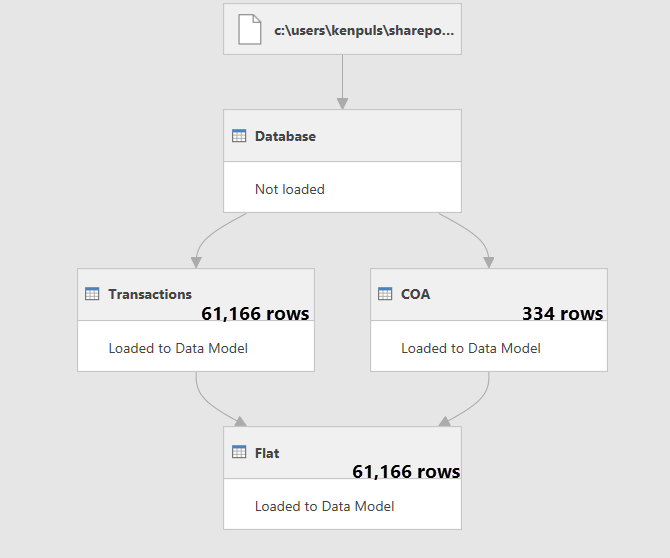

Despite the fact that we can’t trace internal Excel refreshes, we can draw some conclusions based on power query refresh times. Here’s another model to consider, which is built using a method similar to that which Reid posted about. In this model, I connected to an Access Database using a Connection Only query. I then referenced the “Database” query and:

- Loaded the Transactions table to the Data Model (61,166 records)

- Loaded the COA table to the Data Model (334 rows)

- Merged the two into a table called Flat and loaded this to the data model (61,166 rows)

Graphically, it looks like this:

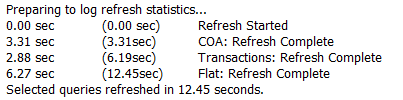

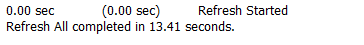

I then used VBA to time the refresh time of each individual query, with these results:

“Now wait a minute…” you might be thinking, “you are calling each query individually, so of COURSE it’s going to refresh the child queries!” And that is absolutely true. So then I compared it to how long it takes to run Excel’s Data –> Refresh All command. In an ideal world, that should re-use the Transactions and COA tables that were loaded, correct?

Um… Oh. It takes a fraction longer even. Possibly because it needs to find the precedent queries? I’m not sure, but what I can say is that it is plainly re-calculating each table as the total refresh is greater than the sum of the tables done individually.

Interested in Learning How to Do this Kind of Thing?

Our New Online Power Query Course just launched this year. You’ll learn Power Query from the best in the business, two Excel MVPs Ken Puls and Miguel Escobar. We’ve included 7 Hours of Self-Paced Video over 31 Courses, with lots of example files, and a Digital Badge powered by Acclaim.

CLICK HERE to claim your spot.

And lest there be any doubt…

This is by far the most frustrating thing here… my client reached out to Microsoft Premier support. (They are big enough that they have a dedicated support rep and premier support tickets.) It’s took a while, but they finally received this email back from the support team:

The performance difference is expected. Excel isn’t running all of the queries in the same cache context, so if two different data sets are based on the same source data then it can’t take advantage of local caching and this is by design. Power BI Desktop runs all of the queries for a “refresh all” inside a single cache context, which would explain why you see the stored procedure being called less often.At this time, there is no mechanism that the refresh time in excel can be improved.

Honestly, I don’t believe that to be the case here. I’ve been discussing this issue with Microsoft program managers and engineers for some time. This was an issue in PowerBI Desktop, and they fixed it. The fix just needs to be ported into Excel is all, but they haven’t been able to get it done yet.I don’t fault Microsoft for building new features. That is their job, and their company success is based on selling more licenses. I get that. Microsoft wants us to use Power Query, and constantly adding easier ways to clean up crappy data is a huge hook in the subscription model. The insider builds of Excel are already hiding the classic data connectors and making Power Query the default Get Data experience – something I think they should have done with the initial release of Excel 2016.When I talk to users they are blown away by the ability of Power Query to transform and clean up their data. It’s only recently that I’ve started getting more and more questions about power query refresh performance. I’m convinced that this is because the user base is still small, and many users just accept the awful performance because they can get cool stuff done. And that’s part of the reason Microsoft hasn’t been able to justify this. If it was your company, would you fix a performance issue that few people recognize, or develop new features to attract new users to your product? But it’s time to change the priority here. Why build all these amazing features and push them if the audience just ends up abandoning them because the refresh time is too long? To me the fact that child nodes are not cached is the number one issue facing Power Query today. I love Power Query. I don’t think anyone who knows me can say anything else. And I love the monthly update cycle that continues to deliver new features and improve the product. But believe me when I say that I would happily stop ALL development on Power Query for two years in order to get power query refresh fixed. It’s that big a problem. I’ve already seen clients abandon Power Query solutions due to the refresh times, and several others who avoid this great tool at all costs due to the refresh latency. Hanging out at the Ask the Experts booth at the Data Insights Summit, 75% of the people that came to talk to me were primarily there to figure out how to improve the performance of their queries.

So what can you do to improve Power Query refresh?

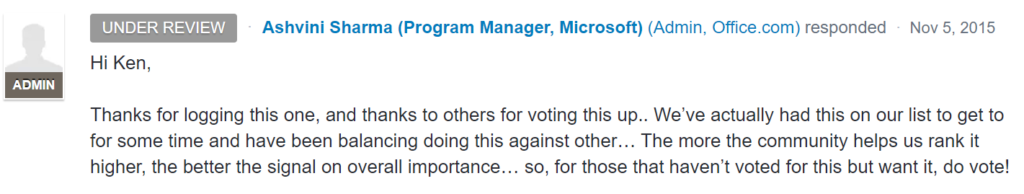

Help us raise this as an issue with Microsoft. You can do that in two ways:Most importantly: Get everyone you know to vote for this item on Excel Uservoice. This is one of the primary vehicles to get our voices heard by the development team. They are listening, and this idea has been marked as under review BUT IT STILL NEEDS MORE VOTES. In Microsoft’s own words:

I’d like to see better power query refresh as the #1 requested item on Excel Uservoice, and we have a long way to go to get there. But wait, you say… it’s marked as “Under Review”! Why do we still need to vote when it’s under review? Let me answer that by pointing you to this idea to make working with Pivot Tables easier (which you also may want to vote up.) It’s an idea that I KNOW they want to implement, but it’s been under review since Nov 2015 and still hasn’t met the bar:

I talked to a couple of the team members at the Data Insights Summit, and they just haven’t had enough votes to signify that power query refresh in Excel is more important than some of the other things they are working on. Notice in the posts, they ask us to keep voting. The more votes we get, the easier it is for the teams to justify the cost of the fixes and new features to the ultimate approvers in Microsoft.That’s the really important thing to do here; KEEP VOTING. And if you’re really passionate about these (or any ideas), get your friends to vote. Get your family to vote. In fact, get anyone else that will listen to you to vote!

Voting Links

Get in touch with a P3 team member