episode 181

What Drives Costs for Data Projects, and How are They Changing Over Time?

What if we told you that, before Power BI, most of the money spent on data projects didn’t go where you thought it did? In this episode, Rob Collie and Justin Mannhardt peel back the layers of inefficiency, miscommunication, and just plain bad luck that have haunted data projects for decades. Spoiler: it’s not all doom and gloom. Some of those old headaches are finally getting the boot, thanks to tools like Power BI and the rise of generative AI.

Rob and Justin take an honest look at how data projects have changed over the years, sharing hard-earned lessons and surprising insights from their time in the trenches. They reflect on the glacial pace of old-school BI, how Power BI changed the game, and why AI might be the next big shift.

If you’ve ever wondered why your pre-Power BI data project cost more than a new car, this episode has the answers (and maybe a little therapy). Listen now for insights, laughs, and a sneak peek at the not-so-distant future of data.

As always, be sure to leave us a review on your favorite platform or share the link with a friend to help new listeners find us.

Episode Transcript

Rob Collie (00:00): Hello, friends. All of us spend a fair amount of time heads-down in data projects, whether as business leaders or as data practitioners. And that focused tactical view of the landscape does tend to dominate our mindset for good reason. If we didn't spend most of our time laser-focused on the specific business needs in front of us and on how to precisely address those needs, well, we'd never get anything done. At the same time though, it's quite useful to also take a step back and look at the broader picture. How does all of this look from, say, an economist's viewpoint? If we view the BI industry and related industries through lenses like costs, productivity rates, barriers to entry, inflection points in ROI, and supply and demand curves, that macro view helps us understand things in ways that we would never see clearly while we're staying laser-focused on the micro of individual projects.

(00:59): The macro view is especially helpful for anticipating the future, but also for understanding why the present is different from yesterday. Where has the industry been, where is it today, and where is it likely headed? These are questions you can really only answer through something like the macroeconomic lens.

(01:19): Now, as a brief aside, I'm reminded of an old hockey adage that goes something like, "Don't play where the puck is. Play where the puck is going to be." That's a cute saying with a lesson to teach, but in my experience, it's not an either-or thing. If you're on a hockey team that never plays where the puck is, I suspect you're not going to win too many games. You need to play both where the puck is and where it's going to be. Translated to our professional world, you need to play where the industry is today while keeping an eye on where it's going to be in the future.

(01:51): This macro lens has already served me very, very well to this point in my life. Through this lens, 15 years ago, I saw that this little thing called Power Pivot was going to lead the industry to a paradigm shift. I saw that early enough to act on it, which led us at P3 to become the largest firm today dedicated to that new Uptempo, higher-ROI paradigm.

(02:13): So in today's episode, Justin and I return to that lens, and in fact revise and improve upon the picture I had 15 years ago, leading to an even more comprehensive view of today versus yesterday than I had even last week. What was it that made Power BI so popular, so impactful relative to the previous wave of tools, when viewed through the macro lens of economic cost and benefit? Then, with that improved macro lens, we turn our attention to the future and see what it tells us about where we all might need to be adjusting our careers and businesses. Pretty cool stuff. We're even going to be leveraging this podcast internally here at P3 with our own team.

(02:54): By the way, there's also a visual that goes along with this episode that is linked in the show notes. It's not required that you have it in front of you, but if you're a visual thinker and learner like I am, you might want to pause now and go look up that link before diving in. Either way though, let's get into it.

Speaker 2 (03:10): Ladies and gentlemen, may I have your attention, please?

Justin Mannhardt (03:15): This is the Raw Data by P3 Adaptive podcast with your host Rob Collie and your co-host, Justin Mannhardt. Find out what the experts at P3 Adaptive can do for your business. Just go to p3adaptive.com. Raw Data by P3 Adaptive, down-to-earth conversations about data, tech, and biz impact.

Rob Collie (03:45): Well, hello again, Justin. On the previous episode with Gil, we had some technical difficulties with your microphone, so I flew solo for that one. So this'll be your first podcast of 2025.

Justin Mannhardt (03:57): Oh, that's right.

Rob Collie (03:58): Welcome to the new year.

Justin Mannhardt (03:59): Welcome to the future.

Rob Collie (04:00): So I'm in my new podcast studio/basement room. It's really cold down here. It's got radiant heat in the floor, and I made the mistake of turning that off. Apparently that takes three days to warm the room.

Justin Mannhardt (04:16): Hm.

Rob Collie (04:17): Big mistake turning that off. And I've got the distant sound of pile driving; they're driving new pilings out in the lake. It was far enough away today that I didn't even hear it upstairs, but in the basement I can hear the thump. Oh wait, I think the temperature just went up one degree on the thermostat.

Justin Mannhardt (04:35): And you notice that right now?

Rob Collie (04:36): Oh no, it went back down. It's taken 20 minutes to think about moving one degree. So today I thought we'd talk about something that I've been fascinated with for 15-plus years now. When you implement any sort of data-related project, like a BI project for instance, where does all the time go? Where does all the cost go, and what are all the obstacles in terms of time, in terms of money, and in terms of things that might even make you hesitate to embark on it? I saw the Power BI revolution coming through this lens. This is what helped me confidently predict, back in 2010, the way the world was going to change. The prediction proved to be, I think, mostly right. Right enough that the company I saw building around that change is now P3 Adaptive.

(05:33): The new style didn't get adopted so thoroughly everywhere, though. We still see a lot of really glacially slow projects being implemented by other outfits, not by us.

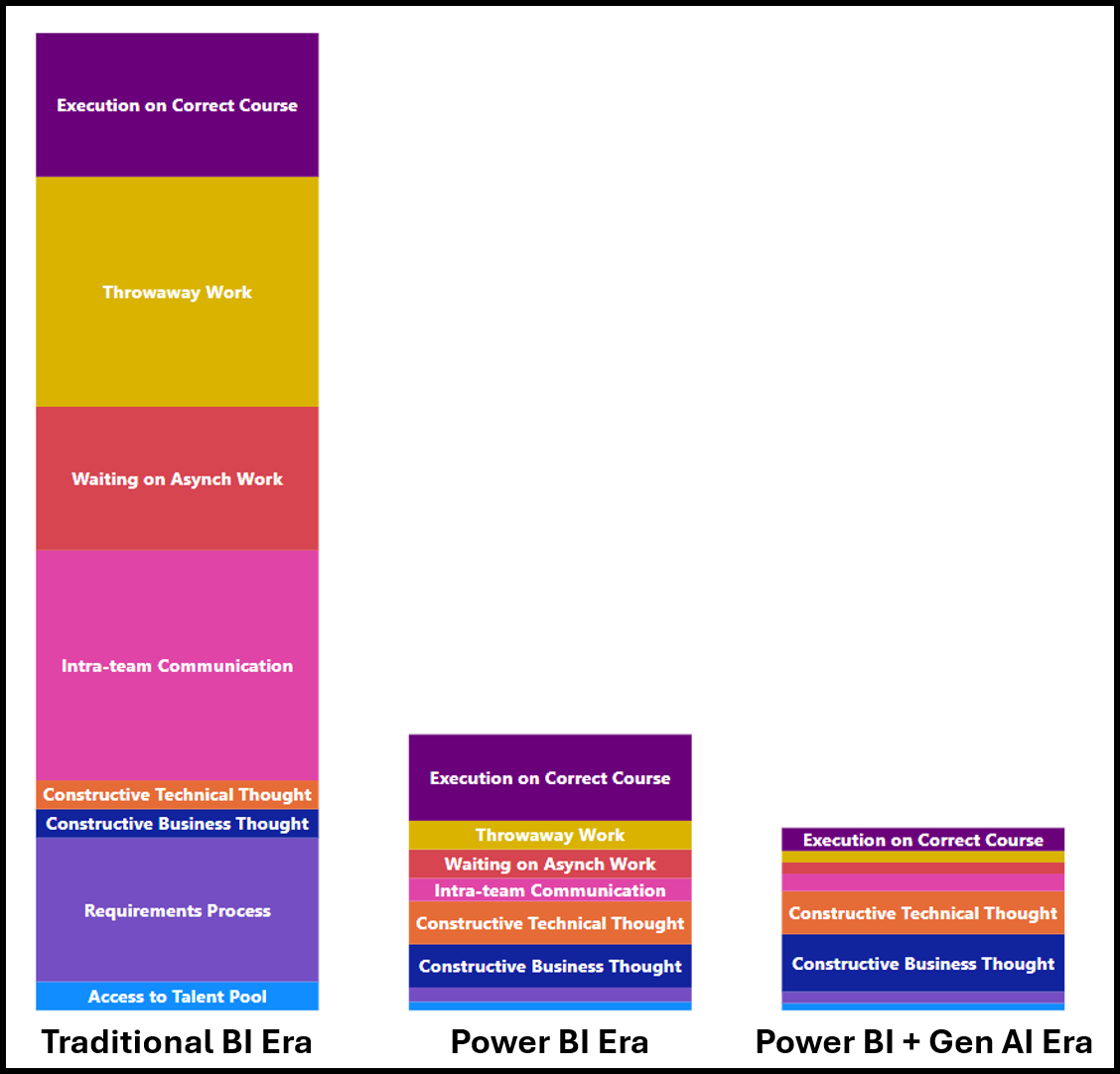

(05:42): Here's the view of where the cost goes. What I used to say was that in a traditional BI consulting project, meaning a project implemented on the pre-Power BI, pre-Uptempo stack (old SSAS, old SSIS, the big heavy-metal stuff), only 1% of the time and 1% of the money was actually spent hands-on-keys, writing the right code, building the right stuff. 99% was spent on communication costs and doing the wrong work. If you measured only the time people spent building the thing that you kept, it would be 1% or less. People found that very controversial. It's proven to be true, but I've been returning to that analysis lately. Let's get more detailed with it.

(06:34): If I did this exercise three different times, I'd come up with three slightly different lists, but they'd be similar. I came up with eight places, eight categories of cost.

Justin Mannhardt (06:43): Eight places where the time goes.

Rob Collie (06:45): Eight places where the time and the money go. I've even modeled this. Not modeled.

Justin Mannhardt (06:51): That term, right?

Rob Collie (06:53): Yeah. That might be a little silly. I've visualized this.

Justin Mannhardt (07:01): I've modeled this in a simulated environment, a digital twin of our business.

Rob Collie (07:05): Not quite that. Again, it's just so striking to me that these days, when I want a simple chart of numbers that I just hand-enter, I still go to Power BI as my default choice. I just feel more confident manipulating Power BI visuals, even basic bar and column charts, than I do manipulating Excel charts. Kind of wild.

Justin Mannhardt (07:26): Yeah, Excel charts are wonky.

Rob Collie (07:28): They've always been wonky. We, on the Excel 2007 team, made an effort to make them far less wonky, and I come back to it and I'm just like, "Oh, I still don't know what I'm doing." I do better than I used to. Anyway, there's a visual that goes with this. I don't think you need the visual to follow along, but if you want to see the three stacked column charts that go along with this, we'll put an image up and link it in the show notes. Let's start with the traditional era and list the eight components; we'll use the same eight for each era. The first component is just straight-up access to the talent pool.

Justin Mannhardt (08:01): Okay. People with the skills to do the work.

Rob Collie (08:06): People with the skills to do the work. In the traditional era, if you needed to implement a BI project, you needed someone, multiple people actually, you needed a team of people.

Justin Mannhardt (08:13): That's right.

Rob Collie (08:14): Each of whom had dedicated a significant portion of their lives 100% to learning that particular skill, that particular tech stack. These people were dedicated IT specialists. They didn't come from the business. They didn't have enough time in their careers to learn the business while also learning this incredibly arcane set of technology. They also needed a tremendous amount of patience to learn it, because even just learning it was slow. There were often steps where you would try something and have to wait an hour to see if it worked.

Justin Mannhardt (08:49): Yes. Yes.

Rob Collie (08:50): There just weren't that many people who had the time, the energy, the patience, or even the exposure to learn this stuff. So right off the bat, getting access to the required talent pool was prohibitive for a lot of projects. When you did get hold of it, you paid for it. You paid for it quite a bit. It was a rare and precious skill.

Justin Mannhardt (09:10): Sure.

Rob Collie (09:10): So, access to the talent pool. I know that doesn't consume time directly.

Justin Mannhardt (09:14): If you wanted to do a project, you would need to find the talent whether you had it on staff or not. You still needed to create capacity there.

Rob Collie (09:24): Yeah. So it's definitely an obstacle, and it was definitely a cost, maybe even in terms of time. If we want to be really nerdy about this, we could say that the eight categories are labeled zero through seven, and category zero was access to talent.

Justin Mannhardt (09:35): Yes.

Rob Collie (09:36): Then the second cost-driver, the second place to chew up time and money, is the requirements process.

Justin Mannhardt (09:41): Oh, yeah.

Rob Collie (09:43): Again, because of the way these tools worked, you had to at least attempt to make all the decisions in advance. You had to specify, so precisely (or so you thought), what the end result needed to do, and you needed to do that upfront. You couldn't just start figuring it out iteratively with the way the old tech stack worked. That would just go forever. Literally, that would go forever. So having a big, robust requirements process was not dumb.

Justin Mannhardt (10:14): It was a necessary evil.

Rob Collie (10:15): Completely necessary, but it was massively expensive, and even worse than people thought. It took a tremendous amount of time. Imagine how much time it took even from the clients, the ultimate stakeholders. The stakeholders probably put more time into the requirements process than the implementers, the consultants or whoever, did. And as the stakeholders, the people paying for it, you don't get to bill the consulting firm for the time you spent educating them on blah, blah, blah.

Justin Mannhardt (10:47): And then the requirements themselves become this religious instrument throughout the lifecycle of the project, which can be so perverse at times.

Rob Collie (10:58): Yeah, we'll send a team of three to do discovery for two weeks. Like, "Ugh," right?

Justin Mannhardt (11:03): Right.

Rob Collie (11:03): You're not only paying for six person-weeks of consulting time or whatever, you're also tied up. You, the person who needs the thing. The business stakeholders are tied up for a big chunk of those two weeks. Then it keeps rolling. It's not done then. And as you pointed out, even when you're quote unquote "done" with the requirements process, you're not done paying for it, because it keeps costing you later. Like, "Oh, well, we'd have to go back and revisit the requirements then, won't we?" Oh, you're imprisoned-

Justin Mannhardt (11:29): That's true.

Rob Collie (11:30): ... by your own requirements. The requirements process was necessary, but incredibly costly.

Justin Mannhardt (11:36): Indeed.

Rob Collie (11:37): That's one and two. I'll list three and four quickly together, even though they're different kinds of things: constructive business thought and constructive technical thought. Constructive thinking about what the business actually needs. Constructive thinking about what we could do for the business: what should we really build? These aren't necessarily in sequential order, right? Of course you'd be doing constructive business thought at the very beginning, before you even conducted requirements. But I wanted these two listed next to each other because it's really important to call out the amount of actual thinking about what you need and what you should build, and then the technical type of thinking, how it can be built. A little bit of a spoiler alert: I think we actually spend a bit more time on this stuff today than we did in the traditional era.

Justin Mannhardt (12:29): I assume, potentially incorrectly, this is part of your thesis here. In the traditional era, and even going back to the previous time sink of the requirements process and the subsequent implementation process, because of the difficulty of the legacy technology, everything felt like it was getting constrained down to best practices. "We do it this way and we just follow this process," and that created a void of constructive thinking. Rob wants to build a car. Okay, what kind of tires does he want? And nobody's asking, "Where is Rob trying to go?"

Rob Collie (13:01): It's real easy, especially when technical experts are involved, and especially if that technical domain feels a bit like a priesthood (it still does in many circles), for constructive thought to get squeezed out. And the technology itself squeezed out constructive thought too. Analysis Services Multidimensional was not really receptive to your creative problem solving. It went right along with the priesthood philosophy.

(13:29): But there's another twist to this as well: constructive thinking wasn't just resisted by the mentality or by the technology. It was also almost disincentivized, because if you thought of something good, it was so expensive to pursue that you kind of didn't want to even think about it.

Justin Mannhardt (13:48): Yeah.

Rob Collie (13:48): The only thing that lay down the road of constructive thinking was disappointment and frustration. Like, "Oh yeah, that would be really good, but we're not going to do it." No one wants to experience that, and so you pretty quickly learn not to touch the hot stove, even though it's not red, you know?

Justin Mannhardt (14:04): Yeah.

Rob Collie (14:05): That's categories three and four, constructive business thinking and constructive technical thinking. Category five: a big consumer of cost, time, et cetera, was intra-team communication. I mean mostly between the technical experts building the thing. You had your data warehouse people, your ETL people-

Justin Mannhardt (14:26): Business analysts or the admins on the source systems. Yeah, the whole gamut.

Rob Collie (14:31): ... and if you draw a diagram with two technical experts in it, there's one communication pathway between those two people. If there's three experts, there's three communication pathways. If there's four experts, I've got to do some math. We're up to six, I think. It expands quickly.

Justin Mannhardt (14:50): Yeah, the exponents get going fast.

Rob Collie (14:53): If you have seven experts, it's 7 × 6 ÷ 2, which is 21 pairs, and heaven help you when you start considering the subsets of more than two people. Like, "Well, we need to get three people on the same page here." It just explodes very, very, very quickly. A lot of energy, a lot of time, even waiting on people to respond to direct messages. There's just so much time lost in intra-team communication.
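The pathway counts Rob works out aloud follow the standard "handshake" formula: a team of n people has n(n-1)/2 distinct pairs, so 4 people give 6 pathways and 7 give 21. The minimal Python sketch below also counts every group of two or more people (2^n - n - 1, pairs included), which is the "subsets of more than two people" problem he alludes to. It illustrates the arithmetic only, under the simplifying assumption that any pair or group might need to coordinate; it is not a cost model of a real project.

```python
from math import comb


def pairwise_paths(n: int) -> int:
    """One-to-one communication pathways among n people: n choose 2 = n(n-1)/2."""
    return comb(n, 2)


def group_subsets(n: int) -> int:
    """Distinct groups of two or more people that might need to get on the same page.

    All 2**n subsets, minus the empty set and the n single-person sets.
    """
    return 2**n - n - 1


# Compare a few team sizes: pairs grow quadratically, groups grow exponentially.
for team_size in (2, 3, 4, 7):
    print(team_size, pairwise_paths(team_size), group_subsets(team_size))
```

Shrinking a seven-person team to two people takes you from 21 pairs (and 120 possible groups) down to a single pathway, which is the effect described later for the Power BI era.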

Justin Mannhardt (15:18): Even what amounts to innocent, subtle misunderstandings of things.

Rob Collie (15:24): Yes.

Justin Mannhardt (15:25): It just happens when communicating with people in general. I had an experience earlier today where we all talked about something as a team last week and we all left saying, "Yeah, we're on the same page," but two people heard slightly different messages, for very understandable reasons. And so then you have to spend time resolving that, and that's very hard on a very technical project.

Rob Collie (15:47): Even if the communication were perfect and error-free, getting a team of that many people on the same page, keeping them there, and waiting for each other to communicate takes a long time. And of course, the communication is never perfect. It is far from perfect. Every additional person on the team, every additional communication pathway, just represents that much more surface area for misunderstanding and miscommunication. I account for this later in category seven. Right here in category five, intra-team communication, I'm still allowing for the possibility that it's perfect. We'll pay the piper later in category seven.

(16:28): Category six is waiting on asynchronous work. Again, because the tools worked a certain way-

Justin Mannhardt (16:35): Yes.

Rob Collie (16:36): ... you had to, again, specify, clarify, et cetera, what you wanted, and then someone had to go off and spend a long time in the dark cranking on the thing behind the curtain, off camera. In the meantime, other things waited. The waiting time isn't always billable, but in some cases it is. Sometimes one technical person is waiting on another technical person, and that has a way of chewing up billable time. But for the business that's waiting on the results, even just not having the solution is a form of cost. If you could have the same results in three months versus six months, mm, guess which one costs the business more, even if they're the same dollar figure. The tools forced a lot of asynchronous work.

Justin Mannhardt (17:17): And little storms of these re-engineering cycles. We have to organize the data warehouse first, then the SSAS guys and gals can do their thing, and then the reporting guys and gals can do their thing. You think, "Oh, we'll figure out how to get it going in a bit of a parallel track." Oh, we have to go backwards and then we're waiting again, and now we've got all this lost unused time going on, and it's like, "Oh, no."

Rob Collie (17:42): I love how we can't even get through these topics linearly, because they keep pulling category seven in. Category seven is wasted work, throwaway work.

Justin Mannhardt (17:54): Throwaway work.

Rob Collie (17:56): Work that you did that turned out to be the wrong work, and it is everywhere. In some sense, we like having eight categories. We like being able to label them one through eight or zero through seven, so that's what we do, but this is a soup. It's not eight rock layers in the geologic crust of the earth. It's not like that. It's a cycle of waste.

Justin Mannhardt (18:20): And throwaway work is why, I think, you're saying it's hard to avoid bringing it up even in the other categories, because it's almost the amplifier of everything else. You realize, "Oh, this iteration of the thing isn't right. What happens now? We go back to the requirements process. We've got more intra-team communication, we've got more waiting." It's like the power-up on everything else.

Rob Collie (18:46): You know what it is? I can see this visual so clearly now. It's a game of chutes and ladders where every ladder goes up one square and every slide, every chute goes back 10.

Justin Mannhardt (18:58): Because that's what would happen. Oh my gosh, I'm just having flashbacks to ugly projects.

Rob Collie (19:03): Yeah, there are just so many loop-backs that lead to more of the cost-driver categories that are quote unquote behind us on the list. Everything magnifies everything else.

Justin Mannhardt (19:16): In my career, I probably came into data at the tail end of this era. I was working on these projects a couple of years before Power BI really started to take off, and my point of view is that the industry at large and businesses were trying to be proactive about the throwaway-work amplifier by doing more and/or better requirements work. It always went back to that beginning: "We don't want to have all this downstream implication, so we're going to get it right on the front end." Which is an impossible ask.

Rob Collie (19:50): Yes.

Justin Mannhardt (19:51): It's not realistic.

Rob Collie (19:52): It's a really easy disciplined lie to tell yourself-

Justin Mannhardt (19:56): Right.

Rob Collie (19:56): ... that, "This time we're going to nail the requirements." No, you're not. You're just not. This comes back to the fact that the process of these projects is fundamentally one of discovery. It just is. You have to discover the nature of the problem. You think you know the problem, but you actually don't. You don't really know the problem until you truly engage in solving it in a committed way. Then you get to understand the business problem. You were right that there's a problem, and you're right about the problem and the need in broad strokes, but you inevitably discover, "Oh, there was so much nuance lurking." Again, the only way to discover it is to try to solve it.

(20:35): Then you add to that my other principle, which is that human beings never know what they need until they've seen what they've asked for, which is really kind of the same thing. "Oh, now that I've seen the chart that I thought I needed, I realize immediately, no, no, no, no, no. I need it broken out a different way." Even building the visual for these eight categories, there was a degree of iteration. I could not have specified it ahead of time and then successfully executed on that spec, and that was the simplest thing in the world. I wanted three stacked column charts, and even there, the specification, the requirements, evolved.

(21:08): The eighth and final category I identified is the actual work of executing on the quote unquote "right path": building the right thing, the final thing. I've changed my mind a little bit about this one as a result of repeating this exercise today, relative to 15 years ago, and I've decided to be more gracious about the percentage of time it takes. Whereas I used to say that was 1%, I think years and years of Power BI have taught me that there's another phase of executing on the right path that I used to not give enough respect to, which is just following through. In the Power BI world, imagine you get the data model, the semantic model, right, and it's quote unquote "finalized." I know it's always a work in progress, but even just executing all of the reports involves a degree of elbow grease. Aspects of this are getting better over time. Calculation groups have made certain things a lot faster. It used to be like, "Oh, now I've written the year-over-year delta percent measure-"

Justin Mannhardt (22:09): Yeah.

Rob Collie (22:11): ... "for revenue." Now go copy-paste that 12, 15, 30 times for all the other base measures. Some aspects of turning the crank have gotten better; some haven't. Then there's the inevitable iteration on the reports. You test them out with the users, with the stakeholders, and you find out X, Y, and Z need to be improved or could be improved or whatever. Even in the Power BI world, where we're much, much faster getting through those first phases, there's a lot of follow-through, and I have to give the prior era that same credit. Again, that's assuming you ever got there, which you and I both kind of know we never really did. The world never really got to a successful BI implementation. They just reached the point where they were exhausted and called it good. But there's still some amount of execution follow-through.

(22:56): I think it's more than 1%, but there's still much less time spent executing on the correct course. It's a lesser cost, by far, than many of the others. I have it visualized here as quite a bit less than the throwaway work, about the same as waiting on asynchronous work. The thing you would think is the main cost-driver, the main consumer of time, is in the end maybe only 10%.
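The calculation-group point Rob makes above boils down to "write the transformation once, apply it to every base measure" instead of copy-pasting a year-over-year delta percent measure per measure. In Power BI itself this is done in DAX, where a calculation item can refer to whichever measure is in context via SELECTEDMEASURE(). The Python below is only a loose analogy under that framing, with hypothetical measure names and made-up numbers; it is not how P3 or Power BI implements it. It shows the delta percent, (current - prior) / prior, defined once and applied across several measures.

```python
from typing import Dict, Optional

# Hypothetical yearly totals for a few base measures; the names and numbers
# are illustrative only, not data from the episode.
base_measures: Dict[str, Dict[int, float]] = {
    "Revenue": {2023: 100.0, 2024: 120.0},
    "Cost": {2023: 80.0, 2024: 88.0},
    "Units": {2023: 50.0, 2024: 45.0},
}


def yoy_delta_pct(values: Dict[int, float], year: int) -> Optional[float]:
    """Year-over-year change as a fraction: (current - prior) / prior.

    Returns None when either year is missing or the prior value is zero,
    so a blank result is shown instead of a divide-by-zero error.
    """
    current = values.get(year)
    prior = values.get(year - 1)
    if current is None or prior is None or prior == 0:
        return None
    return (current - prior) / prior


# The transformation is written once and applied to every base measure,
# rather than hand-copying a separate "YoY %" definition for each one.
yoy = {name: yoy_delta_pct(vals, 2024) for name, vals in base_measures.items()}

for name, pct in yoy.items():
    print(f"{name}: {pct:+.1%}" if pct is not None else f"{name}: n/a")
```

Adding a fourth base measure here means adding one dictionary entry, not writing a fourth copy of the formula, which is the kind of crank-turning that calculation groups remove.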

Justin Mannhardt (23:20): Yeah, I agree with what you're saying. There was a lot of time spent doing the right thing or trying to do the right thing and ending up with things that were useful. But the surface area of usefulness did get constrained within a company. Maybe you got really good high-level management reporting, but you could never get something that was useful down in the ranks. I vibe with that conclusion.

Rob Collie (23:42): All right, so let's step forward to the Power BI era. For the purposes of the Power BI era, let's assume we're talking about doing it the right way, honestly, the way we built P3 to do it. Not the way it is very often used. Power BI is still used, unfortunately I think, very, very frequently within an at least semi-traditional framework. Like you've mentioned many times before, you came here to this company because you wanted to do it this way.

Justin Mannhardt (24:10): 100%. I wanted in on the, "What is this Kool-Aid?"

Rob Collie (24:14): Let's do that. Because we've listed the eight categories, this can go quite a bit faster, I think. We just talk about what changed for each category.

(24:23): The first category, access to talent pool, well, that became less expensive.

Justin Mannhardt (24:26): Yeah, more people can get in the game or acquire the skills at a lower barrier.

Rob Collie (24:32): Absolutely. Definitely made a difference in my visual. It becomes skinny enough that the data label is no longer visible.

Justin Mannhardt (24:38): Yep.

Rob Collie (24:39): You can see the blue bar, but you can't see the access to talent pool label anymore.

(24:44): Same thing with the requirements process. The requirements process drops to a, it doesn't drop to zero-

Justin Mannhardt (24:49): Right.

Rob Collie (24:50): ... but it drops to a very, very, very thin slice relative to what it was before. You need to spend a little time aligning between the builders and the stakeholders on what the goal is in plain human terms. You talk about it as human beings. You're not drawing ERD diagrams. You're not listing out all the columns you need and all the ... You're not doing that. It's more like a sketch on a whiteboard, or, "Here's a dashboard we need." It's like the exercise we often do in our jumpstarts with the sticky notes: what would be good wins, et cetera. By the way, its accuracy rate is incredibly high. You don't mis-specify things when you're operating at that level. That level is where you're most confident.

Justin Mannhardt (25:32): Yeah. Here's the real tangible comparison: you mentioned before bringing in a small team of experts for two weeks. That was super common. ERD diagrams and data dictionaries. Those two weeks are now, "Let's spend, I don't know, a few hours."

Rob Collie (25:49): That's two weeks of discovery with a team. Then they go and spend more weeks writing it all up.

Justin Mannhardt (25:55): It's not as if requirements aren't happening. They're just so much more integrated into the process of building the thing we think will help us. It's not necessary to spend the time that way.

Rob Collie (26:06): Yeah, because building is so much faster and more synchronous. We're kind of cheating our way down the list of categories as usual, but that's how the process works.

(26:15): Quote unquote, "Bad news." There are two areas of Power BI projects where I actually think we spend more time than we used to. We spend more time on constructive thought, both constructive business thought and constructive technical thought, because it's not disincentivized and it's not resisted. The technology doesn't resist it. And the priesthood that resisted it has sort of been sidelined, at least in environments where you're doing it this way; almost by definition, you've sidelined the priesthood. That's going to be a new saying, sidelining the priesthood. It can mean so many different things. Anyway, actual thinking that pays off: why wouldn't you put more effort into that if you can?

(26:55): So there's something the critics could point to and say, "Hey, look, things got more expensive with Power BI." But only by a little bit, and the ROI on that time is damn near infinite.

Justin Mannhardt (27:07): That's what was needed, and that's something we've been saying for pushing a decade now. Let's restructure where our time goes, spend more time on critical thinking, constructive thinking, less time just trying to hammer through the mush.

Rob Collie (27:20): If you think about it, that's what the ideal would be. The ideal project would be nothing but constructive thinking that gets transformed immediately into output. That would be nirvana, if you didn't have to spend any time on any of the other technical stuff, coding, building things, or whatever. That's what projects would become in a world where tech is absolutely perfect and costs you zero: just the constructive thinking. It's good to see those two categories getting more air to breathe in today's methodology. It's only a little bit tongue-in-cheek that it's more expensive.

(27:58): Okay. Intra-team communication. Well, it shrank again, dramatically.

Justin Mannhardt (28:03): It can go way down and this is the gift of the software. Where you needed four, five, six different experts in different technical disciplines, now one person can have the skills in a single software tool to do all of those same things. It's a gift.

Rob Collie (28:20): Yeah, and be the same person who gets on the same page with you about requirements. In the traditional model, there's the business analysts/solution architects that are really quote unquote-

Justin Mannhardt (28:30): Translators.

Rob Collie (28:31): ... "the people that you talk to. The translators." Translation is also built into this multi-talented, multi-disciplinary skill set that a modern Power BI professional can embody. It's not zero. The stakeholders are still part of the team.

Justin Mannhardt (28:47): Of course.

Rob Collie (28:48): Sometimes it does still take more than one technical expert but the overall footprint is much, much smaller. Given that the total cost of this explodes combinatorially as the team grows, if you shrink the team to a small fraction of traditional size, you've really exponentially reduced the intra-team communication cost.

(29:08): All right, waiting on asynchronous work. There is still some of that, absolutely.

Justin Mannhardt (29:12): Yeah, similar to the communication. There's still times when you need something clarified or you need something done outside of the Power BI environment or you got to wait for some feedback. But it's just not nearly as high of a percentage.

Rob Collie (29:26): I'm actually talking about asynchronous work. There are still times when the expert disappears and goes and builds that new fact table into the data model or whatever. It just doesn't make sense to keep the stakeholders engaged while it's being built, but that asynchronous work is oftentimes 15 minutes. I used to tell people when I was a consultant, if I was looking at a hard problem, "Hey, y'all, you all could refresh your coffees. I'll just noodle on this." They'd come back and I'd have it not just solved, but also integrated in. Of course 15 minutes is an extreme example of asynchronous work, but it's the exception that sort of proves the rule. Whatever it is, however long you were waiting asynchronously for something to be built before, sometimes you don't wait at all because it's just happening live there with you. But when you do wait, you wait a fraction of the time that you used to.

Justin Mannhardt (30:13): It was like a standard operating procedure for me. When I would do a jumpstart with a client, let's say you spend most of the morning kind of doing the sticky notes and the whiteboard and getting wrapped around the business problems you want to try and attack. Then I'd say, "Okay, I'm just going to be awkwardly quiet for the better part of 30, 45 minutes here and then we'll look at it together. You'll actually have a mock-up of what this thing's going to look like already." As a person hands-on-keyboard coming in at the end of that traditional era, doing that would have been impossible. You'd be like, "Okay, let's all break and we'll come back in a week," if you're lucky, so.

Rob Collie (30:48): Yep. There's still throwaway work, but it's actually a positive thing that there is because all work costs less today than yesterday, so by definition, throwaway work will scale down accordingly. In a way though you're willing to throw away more because along the way you've spotted something better, whereas in the old model you'd have gone, "Nah, we'll keep it."

Justin Mannhardt (31:11): Yeah.

Rob Collie (31:14): Still though, as a total amount of cost, throwaway work, it's almost negligible. It's negligible despite you being more willing and enthusiastic to throw away work because it does cost less to change and you've spotted the opportunity.

Justin Mannhardt (31:27): As we've been talking, I've been looking at your chart. I think you're correct. The time spent on work that gets thrown away goes down, but I think the volume of iteration increases.

Rob Collie (31:42): Yeah, that's right. The population of work that's thrown away, if you look at it as an individual list, that list gets longer. You throw away a longer list of work. Each item on that list is diminishingly small in terms of how much work it actually was that you threw away.

Justin Mannhardt (31:56): I like what you said there: in the traditional era, you would just concede and keep something because it was just not practical to attempt that many iterations. You just couldn't. So I think that's a distinction worth thinking about in this too.

Rob Collie (32:13): It is, and it's just impossible to get so many different dimensions and nuance into a two-dimensional visual.

Justin Mannhardt (32:20): Yeah. We need some 4D here.

Rob Collie (32:22): Keep in mind, even if you look at these two stacked column charts, it's not like you got the same results in column two with Power BI at a fraction of the total cost. You got a much, much, much better result.

Justin Mannhardt (32:36): You need a dashboard, Rob. You need a time visual, results visual.

Rob Collie (32:40): Small multiples. We need to just blow this thing out.

Justin Mannhardt (32:45): Yeah, just throw some machine learning at it.

Rob Collie (32:48): Execution on correct course. I think this is, in a way, the one that shrinks the least relative to the traditional era. It still shrinks because everything's faster. But there are some, at least today, there are some parts of the process that just don't really get a whole lot faster. Pixel perfect layout of dashboards as of the time of this recording is still a major pain in the ass.

Justin Mannhardt (33:12): Yeah.

Rob Collie (33:12): It's setting conditional formatting and all that stuff. It's just tedious as hell. Then there's the human component of it, like getting feedback, and there are diminishing returns in the human plane as tech improves. Humans are only going to move as fast as humans do.

Justin Mannhardt (33:30): This is one of those dimensional nuances. I'm sitting here wondering, "Do we spend less time executing on the correct course or more time because we've afforded ourselves more time to go down the right path?" Or, like you were saying, it's more like the results thing. We get farther down the correct course, which is another thing that I think is important.

Rob Collie (33:52): To be fair, this visual is only talking about cost. To give the second column credit for the quality of results it produces, I would need to shrink the second column to a fraction of its current height in my visual. I'd have to make all of the slices so tiny that you couldn't even see them, and even then it wouldn't really capture it. But yeah, if we represented quality of result as its own bar, it would be just as mismatched as the cost. The quality of result is so much higher in the second column, in the Power BI era.

(34:22): Here's the place, Justin, where I'm sneaking gen AI back onto the menu. I know that we've talked a lot about it, but the reason I'm revisiting this analysis, which I performed 15 years ago before deciding to bet my career on a change, is that we're experiencing the signs of another similar disruption. It comes from a completely different direction: generative AI that does technical work or helps you do technical work, helps you generate code, helps you generate logic. Please, please, please, please, please help me lay out reports and format them the same way over and over again, please. If I have one ask of the gen AI gods, it's to make report layout and formatting less tedious.

(35:05): By the way, we're up to 60 degrees in my podcast studio.

Justin Mannhardt (35:10): Breaking news.

Rob Collie (35:12): I think it's taken an hour and a half to move two degrees.

Justin Mannhardt (35:14): Wow. Well, you haven't taken off your jacket yet, so.

Rob Collie (35:17): Oh no, it's staying on for the duration.

(35:21): Yeah, this was the reason to revisit it. I want to view gen AI through that same lens, and I think there are a number of reasons to do so. Number one, this worked so well for me before, on the biggest decision of my professional career, so it makes sense to go back to the methodology that worked. But also I think it might help people understand and buy in. If you find yourself today thinking that gen AI is not going to change your job, is not a threat to your job in the Power BI space, for instance, you should be a little bit concerned about the fact that I've heard that before. I heard that last time. Everyone who was in on the traditional philosophy said all the same things. "This'll never be good enough. This will never measure up. The quality control problems will be blah, blah, blah." It's the same excuse-making. It's the same self-comfort. Having gone through one of these transitions before and had it benefit me, it would be hypocritical of me to now play the entrenched old guard, and also just really not smart.

(36:30): Viewing it as one continuum, as a series of shifts in this direction, my hope is that not only does it help me, but it'll also help others understand that it's not the same threat that they think it is. I think most traditional BI pros reacted to Power BI as an existential threat, binary, yes or no: either this is going to render me useless or it is useless. Those were the only two choices. Once they subconsciously defined the question that way, everyone concluded that the technology was going to be useless, which was absolutely wrong. A lot of those people who resisted like that are still employed today, though. They're still doing things. They just happen to be doing things differently. A lot of them just sort of quietly laid down their arms overnight and became ...

Justin Mannhardt (37:23): "We've realized we need to get on board with this."

Rob Collie (37:28): Yeah. I don't think it's binary.

Justin Mannhardt (37:30): No. Too simple.

Rob Collie (37:32): I think it's just a continuation of this same historical trend.

Justin Mannhardt (37:38): What's interesting, I was thinking about this the last several days, especially when I hear people say, "AI isn't good at this particular thing yet," or, "I don't think it's going to replace that aspect of it." There are two reframings I'd encourage people to think about here. The first: focus in on something like the DAX language. There are a lot of people out there who say, "Oh, it's not good at DAX yet." Well, there are plenty of examples where it actually is, and it's only going to keep getting better. The other reframing, which I've really taken up in some of my own thinking lately, is, "Okay, why do we write DAX in the first place?" Because we're trying to address some sort of business problem or need, and that measure or set of measures is going to give us what we need. So the other question is, "Well, can AI do things that make this way of addressing that need no longer the best way of addressing it?"

(38:40): I'm working with one of our customers right now on a pilot project, building effectively a custom AI agent that replicates certain parts of what an analyst does. It's not like we've got this AI agent doing Power BI work. It's executing its own Python scripts and that kind of stuff, but it's still trying to give us the insights we're after. So I think that's the other reframing with gen AI and Power BI: there's real potential that addressing the business problem or opportunity will be done with different tools and different approaches entirely. So I think we need to be open and mindful that, yes, it can help you with very real, specific things in the ecosystem we develop in today, but also remember that there was a time when Power BI didn't exist, and then all of this happened for you. I think there's potential for similar things to start happening. Just being awake and alert to all of that is the most important thing in this era.

Rob Collie (39:41): I agree with you, and I think it's the hardest thing to imagine in all of this.

Justin Mannhardt (39:46): Yeah, right now it is.

Rob Collie (39:48): You think about even Power BI really just ... It was the same types of activities. Everything in Power BI has a parallel in the traditional world. There was a parallel to DAX. There was a parallel to M. There was a parallel to VertiPaq. They were all enormous leaps forward, but in the same categories of technology that had already existed. The hardest thing to imagine is the places where gen AI might just skip some of those or completely reimagine them. We're early enough in the process to not necessarily have great guesses about where that kind of stuff might land. Admittedly, when I put this thinking cap on, I'm thinking about it through the lens of the same stuff as before. I've labeled the third stacked column "The Power BI + Gen AI Era." You're right, there might be a fourth column coming, but just for the purposes of this exercise, that's kind of all I can wrap my head around. I'm not to the point yet of confidently thinking in the direction that you're talking about. That's not to say that you're wrong. It's just an admission that I'm not there yet.

Justin Mannhardt (40:55): I think the plus is still right. I like to bring that up because I do see potential that's not getting talked about there. It's very unclear where things will land and what we'll be good at. But if we're purely thinking about AI will be an assist to doing the same types of things we're doing today, faster, better, whatever, we're sort of missing the idea that there are new ways of going about solving problems that keep happening. So I think we need to be mindful of that also.

Rob Collie (41:24): It's a good reminder to me.

Justin Mannhardt (41:26): Yeah.

Rob Collie (41:26): I have, very much for the purpose of this exercise, put on my "continuation of the same curve" thinking cap. Again, I think it's a valuable exercise, but there's an implicit premise I've been sitting on, which is that this is really all I need to do. No.

Justin Mannhardt (41:43): Yeah.

Rob Collie (41:44): There are flanking maneuvers coming. They aren't going to have great parallels to the first transition, the first revolution that we've experienced in this game.

(41:55): Which of the eight categories become more or less expensive relative to before? Let's go in reverse order, in a way. I think execution on the correct course will now take less time. There's going to be a sizable reduction in that. I also think that anything that's work is going to be reduced. Throwaway work and waiting on asynchronous work will scale down again, because work is going to move faster in general. Of course, that leaves more room for constructive business thought. Maybe constructive technical thought won't see an increase, because the tools will help us with that.

(42:32): Intra-team communication, again, maybe a little bit less than before. If you're lacking one particular set of knowledge, it's just going to be easier to acquire it. But again, that's kind of in the human plane, so I'm expecting that to be resistant. The net result, though, if you look at the visual, and of course these are approximate, is that the percentage reduction in time I anticipate between the Power BI era and the Power BI plus gen AI era is less dramatic than the transition from era one to era two. That's mostly because the human plane of everything has already become a much higher percentage of the cost in the Power BI era, and the human plane is the place where you're not going to be able to squeeze as much.

(43:21): With every one of these successive generations, until we get those pesky humans out of the equation, which we're not rooting for, we're in a place where there's less and less to accelerate. Within those things, the acceleration might be dramatic. The net effect of this on the marketplace is significant. It necessitates a completely different way of operating. You need to think of yourself as a professional differently. If you're a consulting company like us, you need to think of yourself and the way you operate differently. You have to make changes, and they're not going to be trivial changes. The shift in the columns that make up the total cost is seismic, even though it might not reduce the total cost or increase the total quality of output as dramatically as the first time.

Justin Mannhardt (44:04): I think this is right, especially for something like execution on the correct course. Companies and customers that I work with are becoming more and more interested in doing something with AI, or they might specifically think, "Maybe we have some custom GPTs or bespoke copilots we could build." So a question I find sort of entertaining to ask is, "Well, how long do you think it will take to actually build the GPT once we figure out what we want it to do?" You get a wide range of answers. Is it days? Weeks? The reality is, once you have clarity on what you want it to do, it doesn't take that long. You can set this thing up inside of an hour or less. Where the work goes, and you have it here in the visual, is the constructive thinking.

(44:52): On the pilot project I'm working on now, we're sort of paying for the sloppiness of our past, like, "Okay, if we're going to train an AI agent to perform this analysis, now we have to finally buckle down and define what the hell it is we're doing, because we have to tell it what to do." That's where we're spending our time: making sure we understand that really well so we can train a model to do something. Even though the technology is still sometimes sort of like this weird mystic art stuff, that process doesn't take all that long. I'm talking specifically about the work of building a GPT, which is very different from getting assistance from an AI with my code and things like that. Again, I think we'll see another jump in the number of iterations we can entertain. I think we'll see another jump in how far down the success road we make it. So while the time savings might not be as seismic, I wonder, well, how seismic is the value?

Rob Collie (45:55): Yeah. Forecasting is always an imperfect science. A year from now, six months from now, maybe even less, we'll come back to this visual and be like, "Oh, silly us." But hey, it's editable. We can change the numbers and add a fourth column. But I'm pretty confident in the broad strokes of this forecast.

Justin Mannhardt (46:16): Yeah. I think the next 12 months are going to be really interesting and will start to bring clarity to how right this thinking is. Over the last 18 months, all throughout '24, a lot of people woke up and started paying attention to generative AI. I think this is the year where we start to see more and more practical application. What's really taking hold? What's working? How is it working? We experienced that between the traditional era and the Power BI era as well. There were early adopters and people who got in fast, and then once it started to gain traction in the marketplace, people started to wake up, a lot more people joined the party, and it became more clear. People started copying the way, so to speak. So I kind of predict that's what happens this year. If I were to make a bold prediction on gen AI for the next 12 months, it's that we get to that type of point.

Rob Collie (47:14): I agree with that prediction. There are going to be surprises too. Those are the things we cannot predict. Breakthroughs are something we're not used to in the tech world. Everything that's happened up until this point in our professional lives has been the result of human beings getting smarter about what software should do and how it should be built. Plus, over time, not breakthroughs exactly, but hardware just kept getting more and more powerful, and that quantity of improvement made a qualitative difference in what was possible. You really couldn't build Power BI with 486 processors and 4 MB of RAM. It just wouldn't have worked. The idea that there can be breakthroughs in technology that fundamentally change things overnight, that's new.

Justin Mannhardt (48:02): That has the attention of the whole world. That's very unique, very unique.

Rob Collie (48:09): Yeah. These platforms going from third grade level to post-grad level in their ability to take exams, we're not used to that, and that happened in a year. What's the next thing GPT has released? It was, "Oh, we went to 4." Then they're like, "Now we're onto Os." Okay, wait. What?

Justin Mannhardt (48:30): Why? Why?

Rob Collie (48:31): What's next? We have to be prepared for surprises.

Justin Mannhardt (48:34): Yeah. A lot of people out there talk about hitting the plateau of progress. Again, it's like, we don't know, but I think we're going to get a lot of clarity in '25.

Rob Collie (48:43): Plateau or hockey stick curve? I don't know. We're going to see.

Justin Mannhardt (48:48): We'll have to come back to this one at the end of the year. We'll have to footnote it.

Rob Collie (48:51): I had the forethought to save this visual in a place where I can find it. Solved that one.

Justin Mannhardt (48:55): On to the next problem.

Rob Collie (48:57): All right, well, thank you, Justin.

Justin Mannhardt (48:58): Thank you, sir.

Speaker 2 (48:59): Thanks for listening to the Raw Data by P3 Adaptive podcast. Let the experts at P3 Adaptive help your business. Just go to p3adaptive.com. Have a data day.
