WHAT IS MICROSOFT FABRIC?

Unraveling Microsoft Fabric

Recently, we hosted an exclusive Fabric webinar led by our CEO Rob Collie and CCO Justin Mannhardt. It was a great session unpacking Fabric’s capabilities, but your thoughtful questions made it clear there’s more to explore together. In response, Rob and Justin created this living FAQ as a follow-up resource. Their goal is to provide straightforward answers about Fabric and continue the conversation.

Think of the FAQ as extending the webinar and delving deeper into how Fabric can revolutionize your data management in exciting new ways.

If you’re already looking to dive deeper into Fabric readiness, schedule a call.

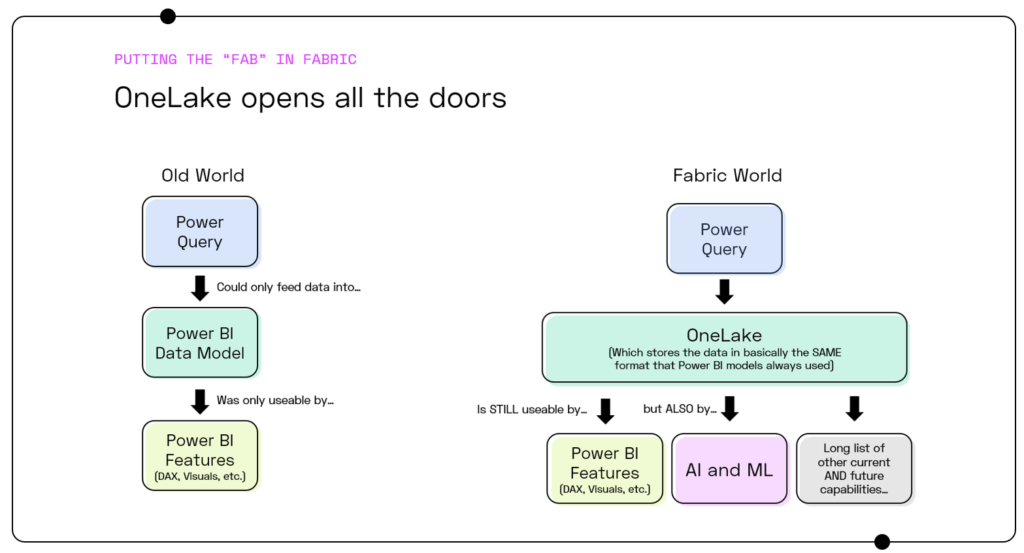

First, we need to distinguish Fabric from OneLake. “Fabric” is itself an “umbrella” marketing name for basically the entire Microsoft data stack – a rebrand of many interrelated technologies – and is therefore very difficult to reduce to a pithy summary. (For instance, Power BI itself is now considered a component of Fabric.)

It’s more helpful to focus instead on OneLake, because that’s where the “do” really comes into focus. OneLake is actually phenomenal, and if you understand Power BI, you already have a HUGE leg up on what makes OneLake special.

Here’s the shortest summary:

- In the Microsoft ecosystem, OneLake is now THE place to put data for ALL of your analytical needs.

- Everything in that ecosystem can see and utilize data in OneLake – if you put your data into OneLake, Microsoft is essentially promising you that they will make EVERY feature of their platform able to interact with it.

- Best part: OneLake speaks a storage format that Power BI understands natively! Under the hood, OneLake stores tables as open, columnar Delta/Parquet files, conceptually very close to the columnar storage inside a PBIX file, and Power BI can read them directly.

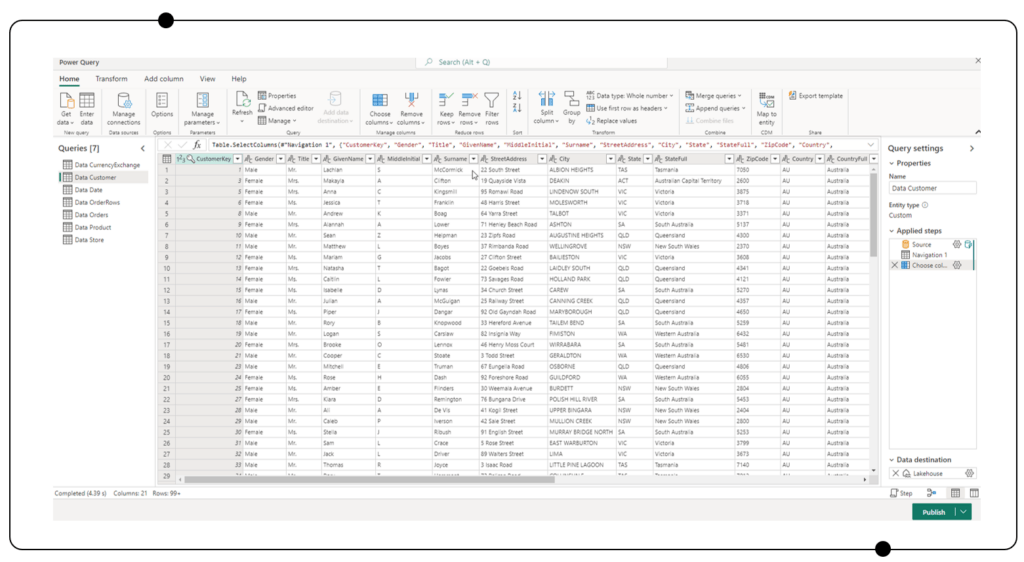

- So all of the steps you take to build a Power BI model today are the SAME things you will do to load data into OneLake in the future. Same Power Query user experience, most importantly, and you won’t notice the difference when the data “lands” in OneLake versus where Power BI used to put it.

- But once you’ve loaded that data, it is NOT “just” available to Power BI – it’s available to every analytical capability Microsoft has to offer – including AI/Machine Learning, but also many other exciting things.

Yep, there is zero new tech to learn. Does this look familiar?

Sure, you can do nothing, and everything will just keep working.

But that’s where the history lesson of Power BI is so helpful to revisit. If you rewind back to before you first discovered Power BI, you probably weren’t too keen on things like “OLAP data modeling” or “in-memory, columnar databases.” Similarly, you might not have even heard of “ETL” before Power Query, either.

Today, though, your organization is benefiting MASSIVELY from PRECISELY those same technologies, because Power BI and Power Query dramatically lowered the barriers to them. Those technologies existed prior to Power BI, but they were so expensive and difficult to deploy that they might as well have not existed for most people.

So, OneLake is now doing the same “dramatic barrier lowering” thing for many OTHER technologies that you have likely, until now, stayed away from.

And just like in the Power BI example, those until-now-unexplored technologies WILL provide massive business value once you start trying them out.

The first and probably juiciest example is AI and Machine Learning. The biggest obstacle to building effective ML models has never been the ML model itself, but prepping the data in the first place.

Imagine, for example, loading a bunch of different kinds of data sources into a Power BI model today. Using Power Query, you connect to disparate things like text files, databases, and cloud services. And then – still using Power Query – you clean and reshape that data so that it’s optimized for analysis.

Now imagine even further that you then realize that you’d like to deploy machine learning against PRECISELY this same data. Should be easy, right?

Nope, not before Fabric and OneLake. Even though the data is just sitting there already, perfectly formatted, in a Power BI model, you couldn’t re-use it. You had to embark on a parallel “data engineering” project to grab that exact same data all over again, using much more difficult platforms like Databricks and Jupyter and languages like Python, R, and SQL.

That was a MASSIVE barrier to entry, and is the biggest reason why machine learning isn’t more broadly utilized than it is today.

In the OneLake world, though, you have zero parallel work to perform. The data that is “powering” your Power BI reports is 100% as accessible to ML as it is to the reports. No data engineering projects needed – because you did all of that already in the course of building your Power BI model.

In short, it often used to cost six figures just to START a machine learning project. But with OneLake, the “data engineering barrier” vanishes overnight.

Data Activator is one that we find particularly exciting. If you’ve ever experimented with Power BI’s “alerts” system, you probably found it lacking. In order to receive an automated alert about some metric drifting outside of normal bounds, you had to pin said metric to a dashboard and then set an alert on it.

But the whole point of alerts is to “notice” things that are DIFFICULT to spot in a report! For example, if you have hundreds of customers and suddenly twenty of them completely stop buying from you, your overall profitability number might not move much. But losing twenty customers overnight is DEFINITELY something you’d want to know about. The existing Power BI alerts feature was 100% incapable of addressing this scenario – so much so that we often invent creative DAX patterns to compensate for it.
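To make the “twenty quiet customers” scenario concrete, here is a minimal Python sketch of the underlying check. It is not Data Activator’s API or a description of how the product works; the function names, the `(customer, period, amount)` tuple layout, and the sample figures are all made up for illustration.

```python
from collections import defaultdict


def customers_gone_quiet(orders, previous_period, current_period):
    """Return customers who bought in previous_period but not in current_period."""
    spend = defaultdict(lambda: defaultdict(float))
    for customer, period, amount in orders:
        spend[customer][period] += amount
    return sorted(
        customer
        for customer, by_period in spend.items()
        if by_period.get(previous_period, 0) > 0
        and by_period.get(current_period, 0) == 0
    )


def total_for(orders, period):
    """Headline revenue number for one period."""
    return sum(amount for _, p, amount in orders if p == period)


# Hypothetical data: 200 customers each spend 100 in January.
# In February the first 20 stop buying, while the rest spend a bit more,
# which hides most of the loss in the overall total.
orders = []
for i in range(200):
    customer = f"C{i:03d}"
    orders.append((customer, "2024-01", 100.0))
    if i >= 20:
        orders.append((customer, "2024-02", 110.0))

lost = customers_gone_quiet(orders, "2024-01", "2024-02")
january = total_for(orders, "2024-01")
february = total_for(orders, "2024-02")
```

In this invented data set the headline total only slips from 20,000 to 19,800 (about 1%), yet `lost` contains twenty customers. That per-customer view is the kind of signal a dashboard-level alert on a single metric tends to miss.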

Data Activator is an entire new PRODUCT designed around doing alerts the RIGHT way. We should expect a rapid pace of innovation with this tool, with much more to come. It’s very exciting to see Microsoft recognizing this gap in their platform and creating a whole new offering to address it.

Beyond Data Activator… Azure Synapse and the Power Platform Dataverse are two products that we LOVE to work with for powering big data analysis and low-code applications and workflows. However, we previously had to fight the battle of moving data into these platforms, too. We can now share OneLake across these workloads as well.

And of course, Microsoft will NEVER sit still, so the biggest examples are things we’ve yet to even hear of.

Shipping with Fabric is full Git integration for Power BI datasets and reports, which means we FINALLY get legit, enterprise-ready source control for Power BI. No more hacking our way through it by storing binary PBIX files in a repository. Multiple developers can collaborate on the same artifacts.

And what’s this about “no refresh”? Since all of the workloads in Fabric run on top of OneLake, there is no need to “refresh” or “import” data again into any of these systems. We can just get to work. Of course, we still need to import or, in some cases, simply link (what Fabric calls a shortcut) the data into Fabric, but those second, third, and subsequent steps to move that data into multiple artifacts are no longer required.

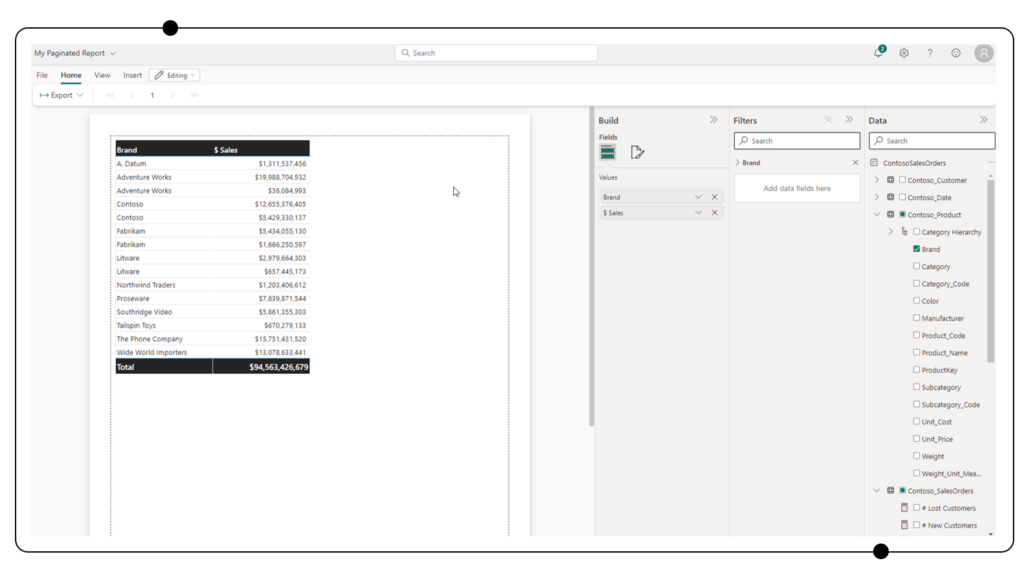

Another example: paginated reporting gets easier. Creating simple paginated reports used to be, well, anything but simple. Fabric delivers an incredibly easy-to-use interface for creating reports using your OneLake data and distributing them as PDFs, Excel files, and more. For a huge number of use cases, we can say goodbye to separate desktop applications and to the additional data structures, movement, and refreshes needed to deliver these reports.

OneSecurity is still on the roadmap and has not yet reached preview, but it will enable us to secure the data once in OneLake, and that security will flow to every place the data is accessed.

Before Microsoft Fabric, if we defined security in something like a SQL Database, we would need to effectively redefine that same security into concepts like workspace access, dataset permissions, row-level security, and object-level security. All of these have different capabilities and constraints, making end-to-end security not just complex but sometimes not possible. OneSecurity will enable us to define security once, in OneLake, which will then propagate to all workloads in Microsoft Fabric. Pretty cool.

Overall, we advise “don’t panic, but fortune favors the prepared.” At a high level, probably the biggest “risk” is just “lost upside” as opposed to “potential downside” – so not the traditional meaning of the word “risk.”

That said, Microsoft is clearly all-in on The Fabric Way, so this new paradigm is also going to be hoovering up most of their engineering resources. We don’t expect “the old way” to break, of course, because they’re not irresponsible, but lots of new stuff will probably only work on the new paradigm.

We’ve seen this before from Microsoft. Power BI mobile device support, for instance, was only made available in the cloud (back when 95% of adoption was on-prem servers). And SSAS Tabular got all kinds of cool features that SSAS MD (the forerunner to Power BI) technically could have had – but didn’t.

So, there IS a risk – actually more of a certainty – of something super compelling becoming available, your stakeholders demanding it, and your infrastructure not being ready to support it because it only works against the OneLake layer. Data Activator is already one example of this, and OneSecurity is already on the horizon, but there will be more. Many more.

So in short, the clearest risk is just lost upside, but “getting caught behind the stakeholder demand curve because Microsoft is focusing their new development work on OneLake” is a very real, traditional-style risk.

This is basically the same story as what we’re already used to: Power BI gateways with dataflows.

This is a great question, with a few possibilities. We think it is important to consider the shortest possible path to making Fabric available to your teams. In this scenario, one option is to update the target of the existing Data Factory processes to be OneLake. However, that may not be practical if your pipelines are invoking things like stored procedures or other code.

Another option is to use CETAS (CREATE EXTERNAL TABLE AS SELECT), which allows us to create a Parquet representation of tables in the Azure SQL Database. This is the same storage format that OneLake is based on, so we can then use shortcuts to make that data available within Fabric for our users. The implementation will sound rather technical, so we’ll spare that from this response, but it is very low friction to put in place and certainly MUCH faster than rebuilding the ELT/ETL from scratch.
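For readers who do want a taste of the technical side, here is a rough Python sketch that composes a CETAS statement for one table. Treat it as an illustration under assumptions, not a recipe: the helper `build_cetas`, the `_export` suffix, and the names `lake_storage` and `parquet_format` are hypothetical, and it assumes an external data source and a Parquet file format have already been created in the database. CETAS support and prerequisites differ between SQL products, so check the documentation for yours before relying on it.

```python
def build_cetas(schema, table, location, data_source, file_format):
    """Compose a CETAS statement that exports one table to external files.

    data_source and file_format are assumed to name objects that already
    exist in the database; nothing here creates or verifies them.
    """
    # Very conservative check so that only plain identifiers are interpolated.
    for name in (schema, table, data_source, file_format):
        if not name.replace("_", "").isalnum():
            raise ValueError(f"unexpected identifier: {name!r}")
    if "'" in location:
        raise ValueError("location must not contain a single quote")

    return (
        f"CREATE EXTERNAL TABLE [{schema}].[{table}_export]\n"
        f"WITH (\n"
        f"    LOCATION = '{location}',\n"
        f"    DATA_SOURCE = [{data_source}],\n"
        f"    FILE_FORMAT = [{file_format}]\n"
        f")\n"
        f"AS SELECT * FROM [{schema}].[{table}];"
    )


# Hypothetical usage: export dbo.Sales under a folder in the storage account.
statement = build_cetas(
    schema="dbo",
    table="Sales",
    location="exports/sales/",
    data_source="lake_storage",
    file_format="parquet_format",
)
print(statement)
```

The generated statement writes the query result as files under the given location. A Fabric shortcut pointing at that storage location is then what makes the data visible inside Fabric, as described above.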

Yes, that’s the concept here. Once data is available in Fabric, there is no replication or movement of data required. Since your data is originally sourced from Azure Databases, we first need to make it available within Fabric by ingesting the data into Fabric, creating shortcuts to Azure storage accounts, or using techniques like CETAS (CREATE EXTERNAL TABLE AS SELECT).

For now, Fabric’s disruption is centered around OneLake and its many benefits. Copilot for Fabric (later this year) will create the types of AI-driven developer experiences you’re referring to.

Not currently in Public Preview – but we will eventually get a full desktop and developer supported experience for OneLake datasets.

There are LOTS of options for us to make data available to Fabric without starting over. We should talk.

Fabric licenses will be purchased similarly to Power BI licenses and Power BI Premium Capacities.

That is correct. The AI/ML capabilities leverage Fabric Notebooks, which can be written in either PySpark (Python), Spark (Scala), SparkSQL, or SparkR. You may also leverage any libraries you’d like within these languages, managing them at the Workspace level, which means they are automatically imported and available to all notebooks created in that workspace!

From Rob – “Replace” is a strong word that I personally would hesitate to use, but maybe Justin would disagree. Going one level deeper on my own thoughts: to the extent that data lakes were already replacing data warehouses, I think that’s also true for Fabric/OneLake.

The data lake “advantage” over SQL-based warehouses was/is essentially this: you don’t have to think as hard about schema and design with a data lake. Data coming in can be “dumped” into a data lake with a lot less thought, in its raw form (or close to it), without fear of losing information, and without fear that the storage format will preclude you from achieving goals down the line.

I think of it as “turning raw data into rectangles” (aka tabular schemas). Data often doesn’t “arrive” in rectangular form (e.g., XML, JSON, folders of interrelated CSVs). SQL warehousing requires you to design all of the rectangular shapes UP FRONT, and that’s both a dangerous and expensive process. The translation itself (from “curly” things like XML/JSON/CSV) risks “losing” important information that was present in the raw form. So you spend a tremendous amount of time on that process – and still get it wrong.

If we could skip all that, it would be great. You always EVENTUALLY need rectangles – analysis by its very nature operates on the things that are regular about your data – but delaying that “rectangularization” until later turns out to be super smart, and OneLake gives you exactly that. Dump and store in raw form, and then extract whatever rectangles you need later.

But if you already have data in SQL format, there’s not much to gain from data lake storage – except that once you load it into OneLake, it’s “on the highway” that exposes your SQL-originated data to basically every single analytical service MS has to offer.
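As a small illustration of “store raw, extract rectangles later,” here is a Python sketch using only the standard library. The order document and both helper functions are invented for this example and have nothing to do with any Fabric API; the point is simply that one raw record can yield different rectangles on demand while the raw form keeps everything.

```python
import json

# Hypothetical raw record, stored exactly as it arrived.
raw = """
{"order_id": 1001,
 "customer": {"id": "C7", "region": "West"},
 "lines": [{"sku": "A-1", "qty": 2}, {"sku": "B-9", "qty": 1}],
 "gift_note": "Happy birthday"}
"""


def order_lines(doc):
    """Rectangle 1: one row per order line, for product-level analysis."""
    return [
        {
            "order_id": doc["order_id"],
            "customer_id": doc["customer"]["id"],
            "sku": line["sku"],
            "qty": line["qty"],
        }
        for line in doc["lines"]
    ]


def order_summary(doc):
    """Rectangle 2: one row per order, for region-level analysis."""
    return [
        {
            "order_id": doc["order_id"],
            "region": doc["customer"]["region"],
            "line_count": len(doc["lines"]),
        }
    ]


doc = json.loads(raw)
line_rows = order_lines(doc)
summary_rows = order_summary(doc)
```

Neither rectangle includes `gift_note`, and neither had to anticipate it. Because the raw document is still there, a third rectangle that uses it can be extracted later without re-ingesting anything.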

From Justin – My answer here is definitely yes, without discounting Rob’s point: a true EDW represents a rather mature state that may not be practical or necessary for every organization. There are two different SQL artifacts in Fabric: the SQL Endpoint on the Lakehouse and a SQL Data Warehouse. The former is essentially read-only, with its schema generated automatically from the Lakehouse. The latter is fully ACID compliant, with full transaction support and full DDL and DML functionality. It is built on the Synapse infrastructure and can most certainly serve as an EDW. The storage layer underneath is still the open Delta format, so you retain the full benefit of flexibility to other Fabric artifacts, as well as external platforms.

At P3 Adaptive, we want to provide you with the knowledge to empower your decisions around Microsoft Fabric adoption. Like Rob said, we will NEVER “hoard” information from our clients. The “traditional” consulting M.O. of keeping one’s clients in the dark was ALWAYS distasteful, but we believe it is now 100% out of date as well. There’s just too much opportunity in the world of data today. Informed clients make better partners, AND they make us better consultants.

As such, our goal is to provide you with a comprehensive perspective – combining the webinar and FAQ – so you understand the full picture when it comes to Microsoft Fabric.

It’s just what we do.

The webinar was a great Microsoft Fabric introduction, but now it’s time to go deeper.